Encryption-level isolation

Encryption-level isolation adds a further layer of security to a multi-tenant architecture. Whereas network-, database-, and application-level isolation segregate data and computational resources, encryption-level isolation protects the data itself, so a failure in one of the other layers does not automatically expose readable data. This is particularly important for sensitive information whose compromise could have severe repercussions for both the tenants and the service provider. In this context, encryption is not just a feature but a necessity. The key approaches to encryption-level isolation are explained in the following sections.

Unique keys for each tenant

One of the most effective ways to implement encryption-level isolation is with AWS Key Management Service (AWS KMS). In a multi-tenant environment, KMS lets you use a different key for each tenant. This adds a layer of isolation: because each tenant’s data is encrypted under its own key, one tenant cannot decrypt another’s data unless it has also been granted permission to use that other tenant’s key.

Tenant-specific keys also make key management and rotation easier. If a key needs to be disabled or rotated, this can be done without affecting other tenants. This is particularly useful when a tenant leaves the service or is found to be in violation of terms: that tenant’s key can be disabled or scheduled for deletion without disrupting encryption for anyone else.
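The per-tenant key lifecycle described above can be sketched with the boto3 KMS client. This is a minimal illustration rather than a complete provisioning workflow: the function names, the `tenant_id` tag, and the `alias/tenant-<id>` naming convention are assumptions made for the example, and the `kms` argument is expected to be a client created with `boto3.client("kms")`.

```python
def provision_tenant_key(kms, tenant_id: str) -> str:
    """Create a dedicated KMS key for one tenant and return its key ID."""
    response = kms.create_key(
        Description=f"Data encryption key for tenant {tenant_id}",
        Tags=[{"TagKey": "tenant_id", "TagValue": tenant_id}],
    )
    key_id = response["KeyMetadata"]["KeyId"]
    # Hypothetical naming convention: lets the application find the key by tenant.
    kms.create_alias(AliasName=f"alias/tenant-{tenant_id}", TargetKeyId=key_id)
    # Automatic rotation is switched on for this key only.
    kms.enable_key_rotation(KeyId=key_id)
    return key_id


def offboard_tenant(kms, key_id: str, waiting_days: int = 30) -> None:
    """Block use of a departing tenant's key, then schedule its deletion."""
    kms.disable_key(KeyId=key_id)
    # KMS accepts a waiting period of 7 to 30 days before the key is deleted.
    kms.schedule_key_deletion(KeyId=key_id, PendingWindowInDays=waiting_days)
```

Because every call names a single key ID, neither function touches the keys of any other tenant.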

Encryption for shared resources

In a multi-tenant environment, there are often shared resources that multiple tenants might access. These could be shared databases, file storage systems, or even cache layers. In such scenarios, using different tenant-specific KMS keys for encrypting different sets of data within these shared resources can provide an additional layer of security.

For instance, in a shared database, each tenant’s data could be encrypted using that tenant’s own KMS key. Even though the data resides in the same physical database, only a principal that is authorized to use the tenant’s key can decrypt and read the data. This effectively isolates each tenant’s data within a shared resource, so that even if one tenant’s key is compromised, the data of other tenants remains secure.
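As a rough sketch of this pattern, the functions below encrypt and decrypt a single value under a tenant's key before it is written to, or after it is read from, a shared store. The function names and the `alias/tenant-<id>` alias convention are assumptions for illustration; `kms` is expected to be a `boto3.client("kms")` instance. The tenant ID is also passed as encryption context, which KMS requires to match exactly on decryption.

```python
def encrypt_for_tenant(kms, tenant_id: str, plaintext: bytes) -> bytes:
    """Encrypt a small value under the tenant's own KMS key."""
    response = kms.encrypt(
        KeyId=f"alias/tenant-{tenant_id}",  # hypothetical alias convention
        Plaintext=plaintext,
        EncryptionContext={"tenant_id": tenant_id},
    )
    return response["CiphertextBlob"]


def decrypt_for_tenant(kms, tenant_id: str, ciphertext: bytes) -> bytes:
    """Decrypt a value, insisting on the tenant's key and encryption context."""
    response = kms.decrypt(
        KeyId=f"alias/tenant-{tenant_id}",
        CiphertextBlob=ciphertext,
        EncryptionContext={"tenant_id": tenant_id},
    )
    return response["Plaintext"]
```

The KMS `Encrypt` operation accepts at most 4,096 bytes of plaintext, so this direct approach suits individual fields; larger payloads are usually encrypted locally with a data key obtained from `generate_data_key`.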

Hierarchical keyring

The hierarchical keyring, which the AWS Encryption SDK provides on top of KMS, adds another layer of structure to support robust encryption practices in a scalable multi-tenant environment. In this model, a master key is used to encrypt tenant-specific keys. These tenant-specific keys are then used to encrypt the data keys that secure individual pieces of data.

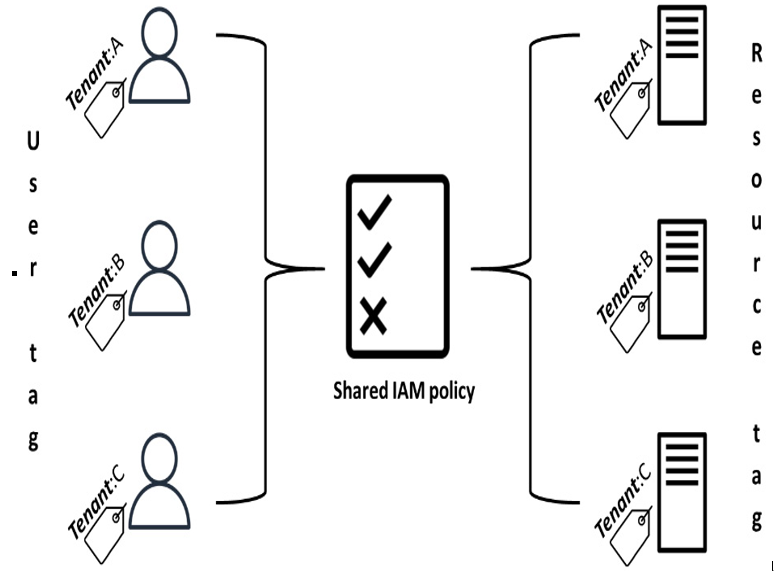
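The three-level shape of the hierarchy can be illustrated with a small, standard-library-only sketch. Note the substitution: instead of encrypting (wrapping) child keys under parent keys as the model above describes, it derives each child key from its parent with HMAC-SHA256, purely so that the example runs without AWS access. The labels, version suffixes, and function name are hypothetical, and the code shows only the parent-child relationships, not how KMS stores or protects keys.

```python
import hashlib
import hmac
import os


def derive_key(parent_key: bytes, label: str) -> bytes:
    """Derive a 32-byte child key from a parent key and a label (HMAC-SHA256)."""
    return hmac.new(parent_key, label.encode("utf-8"), hashlib.sha256).digest()


# Level 1: the master key. In KMS this key would never leave the service.
master_key = os.urandom(32)

# Level 2: one key per tenant. A new version label yields a new tenant key
# without any change to the master key.
tenant_a_v1 = derive_key(master_key, "tenant:a:v1")
tenant_a_v2 = derive_key(master_key, "tenant:a:v2")
tenant_b_v1 = derive_key(master_key, "tenant:b:v1")

# Level 3: one data key per record, derived from the owning tenant's key.
record_key = derive_key(tenant_a_v1, "record:42")
```

The same record label under a different tenant key produces a different data key, which is the property that keeps tenants separated at the lowest level of the hierarchy.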

This hierarchical approach simplifies key management by allowing lower-level keys to be changed or rotated without affecting the master key. It also enables granular access control by allowing IAM policies to be tailored to control access to different levels of keys. For example, you could configure an IAM policy that allows only database administrators to access the master key, while another policy might allow application-level services to access only the tenant-specific keys. Yet another policy could be set up to allow end users to access only the data keys that are relevant to their specific tenant. This ensures that only authorized entities have access to specific keys.
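A policy of the kind described for application-level services might look like the following sketch, which builds the policy document as a Python dictionary. The function name, the statement ID, the `tenant_id` encryption-context key, and the example key ARN are all assumptions for illustration; the `kms:EncryptionContext:` condition key prefix is the standard KMS mechanism for tying permissions to an encryption context value.

```python
import json


def tenant_key_policy(key_arn: str, tenant_id: str) -> dict:
    """Build an IAM policy that allows use of one key for one tenant only."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "UseTenantKeyOnly",
                "Effect": "Allow",
                "Action": ["kms:Encrypt", "kms:Decrypt", "kms:GenerateDataKey"],
                "Resource": key_arn,
                # Requests must carry this tenant's ID as encryption context.
                "Condition": {
                    "StringEquals": {"kms:EncryptionContext:tenant_id": tenant_id}
                },
            }
        ],
    }


# Placeholder ARN; substitute the ARN of the tenant's actual key.
policy = tenant_key_policy(
    "arn:aws:kms:us-east-1:111122223333:key/EXAMPLE-KEY-ID", "acme"
)
print(json.dumps(policy, indent=2))
```

A separate, more restrictive policy would cover the master key, so that the roles granted this policy never gain access to it.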

Additionally, the hierarchical nature of the keys makes the rotation and auditing processes more straightforward. Keys can be rotated at different levels without affecting the entire system, as you can change tenant-specific or data keys without needing to modify the master key. Each level of the key hierarchy can have its own set of logging and monitoring rules, simplifying compliance and enhancing security.

In conclusion, achieving secure data isolation in a multi-tenant environment is a multi-layered challenge that demands a holistic approach. From network-level safeguards to application-level mechanisms and encryption strategies, every layer plays a pivotal role in ensuring that each tenant’s data remains isolated and secure.